The fifth research intensive took place from 4 to 8 August in Bedford. This week-long laboratory included the following project participants: Helen Bailey, Michelle Bachler, Anje Le Blanc and Andrew Rowley. We were joined by Video Artist Catherine Watling and four Dance Artists: Catherine Bennett, River Carmalt, Amalia Garcia and James Hewison. There were several aims for this lab: 1. To explore, in a distributed performance context, the software developments that have been made to Memetic and the AG environment for the manipulation of video/audio streams. 2. To integrate other technologies/applications into this environment. 3. To initiate developments for Compendium. 4. To explore the compositional opportunities that the new developments offer.

In terms of set-up this has been the most ambitious research intensive of the project. We were working with four dancers distributed across two networked theatre spaces. For ease we were working in the theatre auditorium and theatre studio at the University, both of which are housed within the same building. This provided the opportunity for emergency dashes between the two locations if the technology failed. We worked with three cameras in each space for live material, alongside pre-recorded material in both locations. Video Artist Catherine Watling worked with us during the week to generate pre-recorded, edited video material that could then be integrated into the live context.

1. Software developments in a distributed environment

From my perspective there were two significant developments here. The first was the ability of the software to remember the layout of projected windows from one ‘scene’ to another. This allowed for a much smoother working process and for the development of more complex compositional relationships between images. The second was the transparency of overlaid windows, which allows for the creation of composite live imagery across multiple locations.
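To make the first of these concrete, here is a minimal sketch of what ‘remembering’ a layout per scene could look like. It is only an illustration and not the project's actual code: the names `WindowLayout` and `SceneStore`, the fields and the stream labels are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class WindowLayout:
    """Position, size and opacity of one projected window (hypothetical fields)."""
    stream: str           # label for the video stream shown in the window
    x: int
    y: int
    width: int
    height: int
    opacity: float = 1.0  # 1.0 = opaque, 0.0 = fully transparent


@dataclass
class SceneStore:
    """Remembers the window layout saved for each named scene."""
    scenes: dict = field(default_factory=dict)

    def save(self, scene, windows):
        # Store copies so later changes to the live windows do not alter the saved scene.
        self.scenes[scene] = [WindowLayout(**vars(w)) for w in windows]

    def recall(self, scene):
        # Return copies of the saved layout, or an empty list for an unknown scene.
        return [WindowLayout(**vars(w)) for w in self.scenes.get(scene, [])]


store = SceneStore()
live = [
    WindowLayout("overhead-camera", 0, 0, 1024, 768),
    WindowLayout("tablet-drawing", 0, 0, 1024, 768, opacity=0.5),
]
store.save("duet", live)
live[1].opacity = 0.9            # changing the live window afterwards...
restored = store.recall("duet")  # ...does not change what was saved for the scene
```

Keeping opacity alongside position and size means that a recalled scene would restore the overlay relationships between windows as well as their placement.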

What’s really interesting is that the software development is at the stage where we are just beginning to think about user interface design. During the week we looked at various applications to assess the usefulness of the interface, or elements of its design, for e-Dance. We focused on Adobe Premiere, Isadora, Arkaos, Wizywyg and PowerPoint. These all provided useful insights into where we might take the design.

2. Integration of other technologies

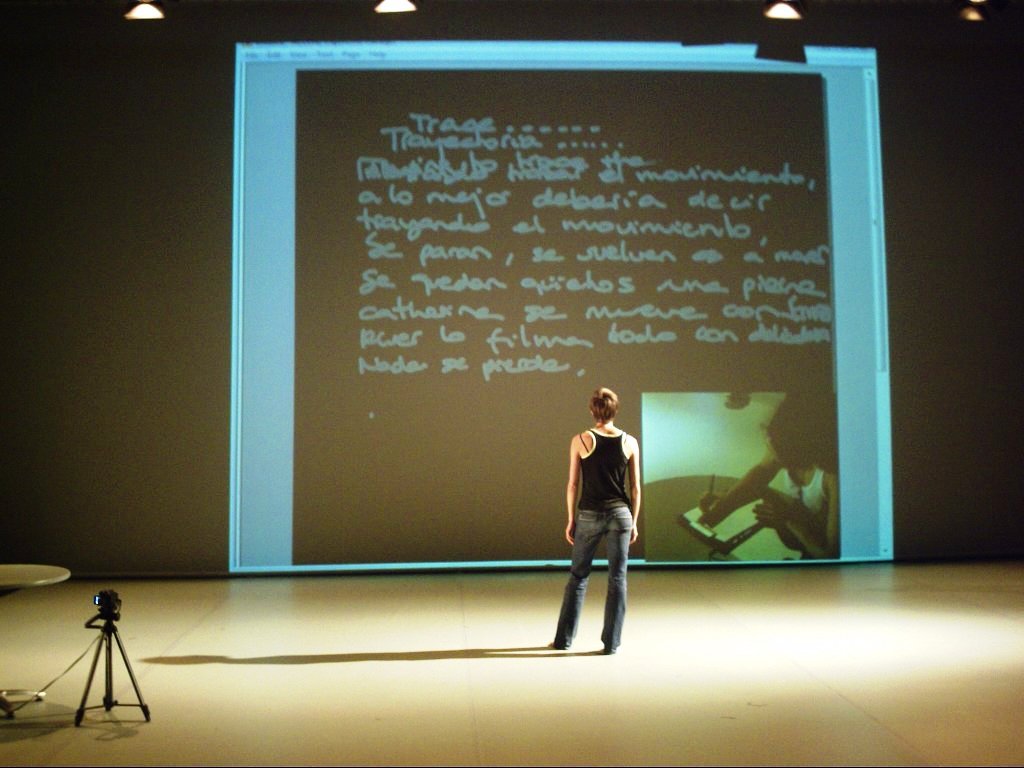

We had limited opportunity to integrate other technology and applications during the week. I think this is better left until the software under development is more robust and we have a clear understanding of its functionality. We did, however, integrate the Acecad Digi Memo Pads into the live context as graphics tablets. I was first introduced to these by those involved in the JISC-funded VRE2 VERA Project running concurrently with e-Dance. The pads provided an interesting set of possibilities, both in terms of operator interface and in the inclusion of the technology within the performance space to be used directly by the performers.

3. Begin Compendium development

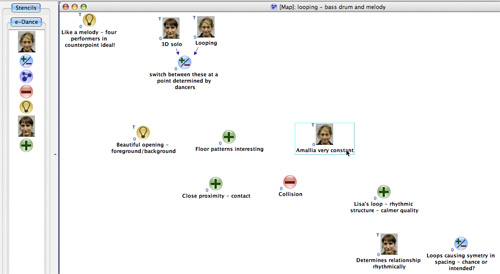

The OU software development contribution to the project began in earnest with this intensive. Michelle was present throughout the week, which gave her the opportunity to really immerse herself in the environment and gain some first-hand experience of the choreographic process and the kinds of working practices that have been adopted by the project team so far.

Throughout the week Michelle created Compendium maps for each day’s activity. It became clear that the interface would currently militate against the choreographic process we are involved in, so having someone dedicated to the documentation of the process was very useful. It also gave Michelle first-hand experience of the problems. The choreographic process is studio-based; it is dialogic in terms of the construction of material between choreographer and dancers; it involves the articulation of ideas that are at the edges of verbal communication; and judgements are tacitly made and understood. Michelle’s immediate response to this context was to begin to explore voice-to-text software as a means of mitigating some of these issues.

The maps that were generated during the week are really interesting in that they have already captured thinking, dialogue and decision-making within the creative process that would previously have been lost. The maps also immediately raise the question of authorship and perspective. The maps from the intensive had a single author: they were not collaboratively constructed, and they represent a single perspective on a collaborative process. So over the next few months it will be interesting to explore the role/function of collaboration in terms of mapping the process. We will need to consider whether we should aim for a poly-vocal single map that takes account of multiple perspectives, or an interconnected series of individually authored maps.

4. Compositional developments

Probably influenced by the recent trip to ISEA (the week before!), the creative or thematic focus for the laboratory was again concerned with spatio-temporal structure, but specifically with location. I began with a series of key terms that clustered around this central idea: dislocate, relocate, situate, resituate, trace, map. A series of generative tasks were developed that would result in either live/projected material or pre-recorded video material. This material then formed the basis of the following sections of work-in-progress material:

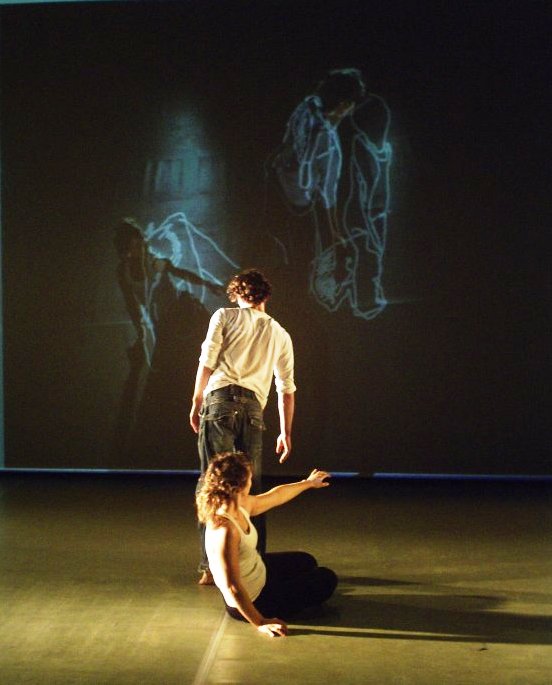

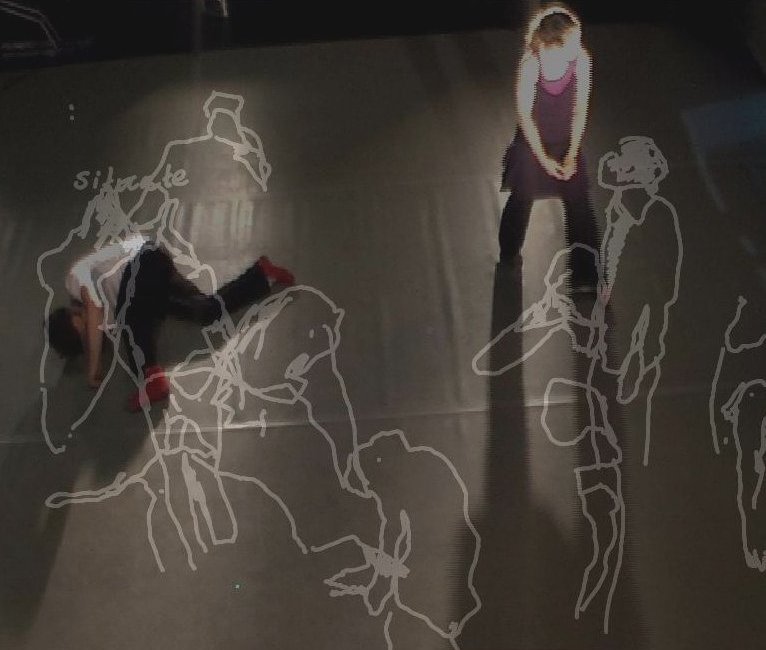

Situate/Resituate Duet (Created in collaboration with and performed by River Carmalt and Amalia Garcia) (approx. 5 minutes)

The duet was constructed around the idea of ‘situating’ either part of the body or the whole body, then resituating that material either spatially, onto another body part, or onto the other dancer’s body. We used an overhead camera to stream this material and then project it live, in real time and to scale, on the wall of the performance space. This performed an ambiguous mapping of the space.

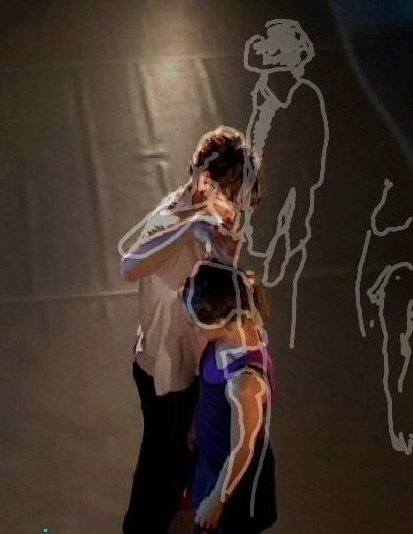

The Situate/Resituate Duet developed through the integration of the Digi Memo Pads into the performance context. James and Catherine were seated at a down-stage table where they could be seen drawing. The drawing was projected as a semi-transparent window over the video stream. This allowed them to directly ‘annotate’ or graphically interact with the virtual performers.
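For anyone curious about what a semi-transparent window involves, the general technique is alpha blending: each pixel of the drawing layer is mixed with the video pixel beneath it according to an opacity value. The sketch below is a generic illustration only; I am assuming nothing about how the project software actually does this, and the function names and the tiny example frames are made up.

```python
def blend_pixel(overlay, base, alpha):
    """Mix one RGB overlay pixel over a base pixel; alpha is the overlay opacity (0.0 to 1.0)."""
    return tuple(round(alpha * o + (1.0 - alpha) * b) for o, b in zip(overlay, base))


def blend_frame(overlay, base, alpha):
    """Blend two frames of equal size, each given as a list of rows of RGB tuples."""
    return [
        [blend_pixel(o, b, alpha) for o, b in zip(overlay_row, base_row)]
        for overlay_row, base_row in zip(overlay, base)
    ]


# A hypothetical 1x2 'video' frame and a uniform 'drawing' layer of the same size.
video = [[(100, 100, 100), (0, 0, 0)]]
drawing = [[(200, 200, 200), (200, 200, 200)]]

# Half-transparent drawing over the video stream.
composite = blend_frame(drawing, video, 0.5)
```

With an opacity of 1.0 the drawing would hide the video entirely, and with 0.0 it would vanish; values in between let the drawn marks and the dancers be seen at the same time.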

Auto(bio/geo) graphy (Created in collaboration with and performed by Catherine Bennett, River Carmalt, Amalia Garcia, James Hewison. Filmed and edited by Catherine Watling) (4 x approx. 2-minute video works)

In this task we integrated Google Maps into the performance context as both content and scenography. We used a laptop connected to Google Maps on stage and simultaneously projected the website onto the back wall of the performance space. The large projection of the satellite image of the earth resituated the performer into an extra-terrestrial position.

Each performer was asked to navigate Google Maps to track their own movements in the chronological order of their lives. They could be as selective as they wished whilst maintaining chronological order. This generated a narrativised mapping of space/time. This task resulted in a series of edited 2-minute films of each performer mapping their chronological movements around the globe. Although we didn’t have the time within the week to utilise these films vis-a-vis live performance, we have some very clear ideas for their subsequent integration at the next intensive.

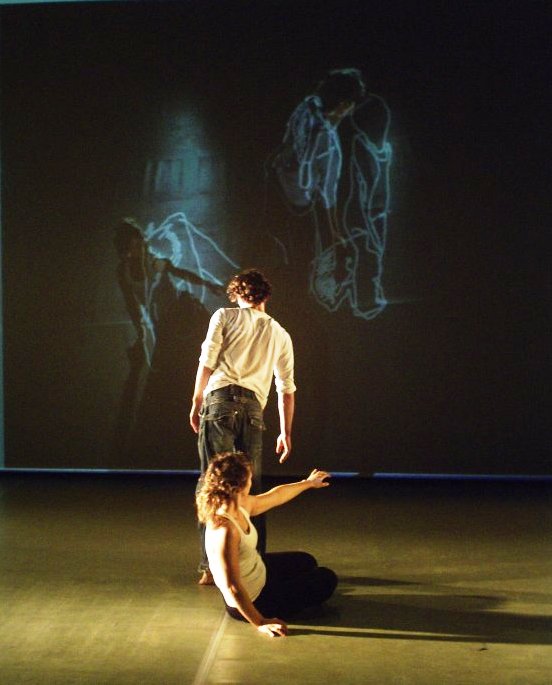

Dislocate/Relocate: Composite Bodies (Created in collaboration with and performed by Catherine Bennett, River Carmalt, Amalia Garcia, James Hewison) (approx. 4 minutes)

This distributed quartet was constructed across two locations. It explored the ideas of dislocation and relocation by fragmenting the four performers’ bodies and then reconstituting two composite virtual bodies from fragments of the four live performers.

We began with the idea of attempting to ‘reconstruct’ a coherent singular body from the dislocated/relocated bodily fragments. Then we went on to explore the radical juxtaposition created by each fragmentary body part moving in isolation.

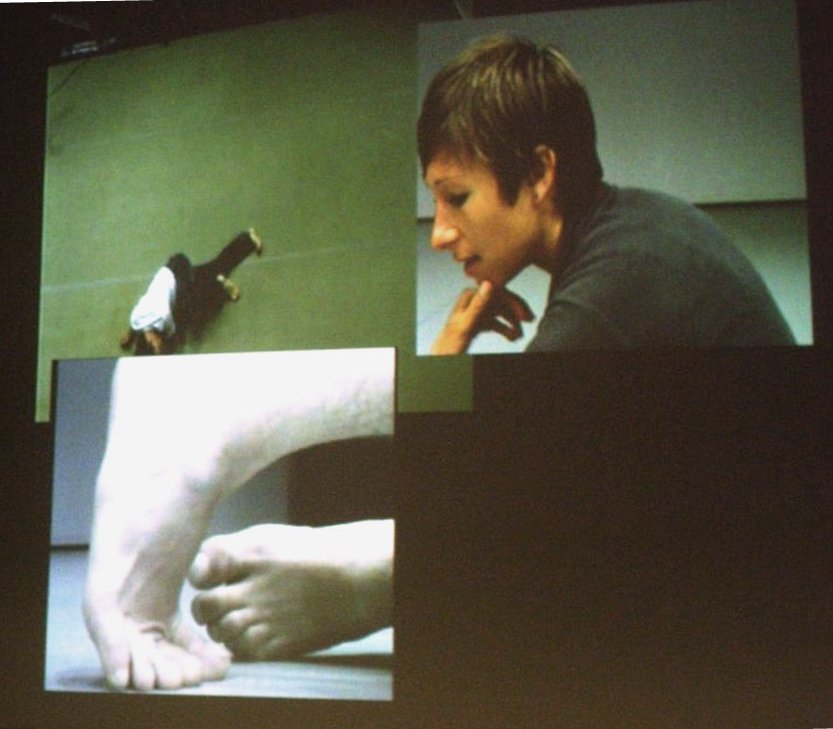

“From Here I Can See…” (A Distributed Monologue) (Created in collaboration with and performed by Catherine Bennett, River Carmalt, James Hewison) (approx. 5 minutes)

This distributed trio was initially focused on the construction of a list-based monologue in which the sentence “From here I can see…” was completed in a list form that functioned through a series of associative relationships.

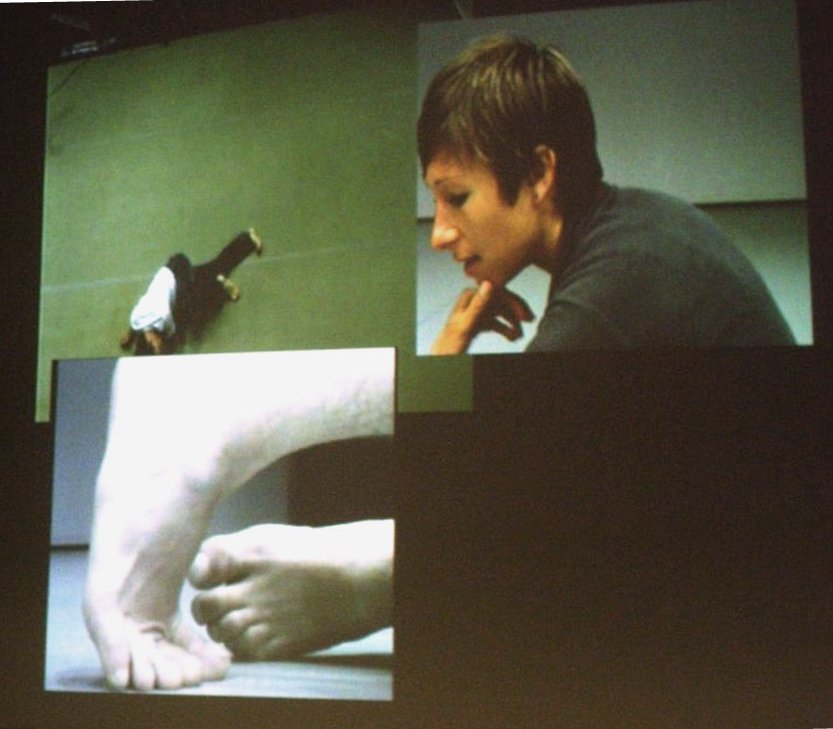

In one location Catherine delivered a verbal monologue while another dancer, in the same location, performed a micro-gestural solo with his feet. A third, non-co-located dancer in the other space performed a floor-based solo.

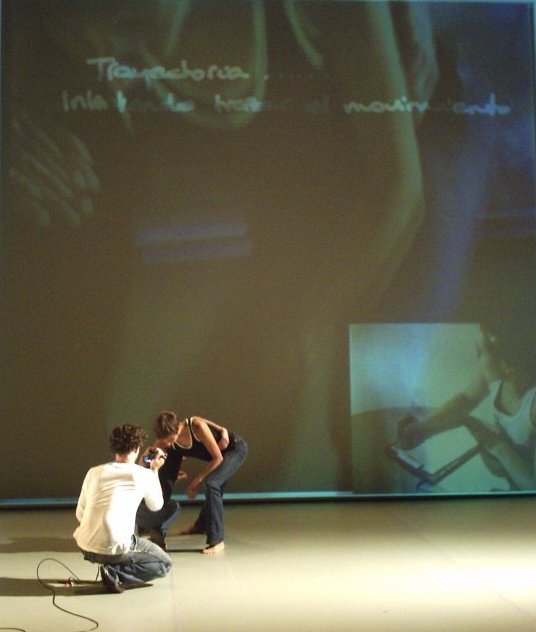

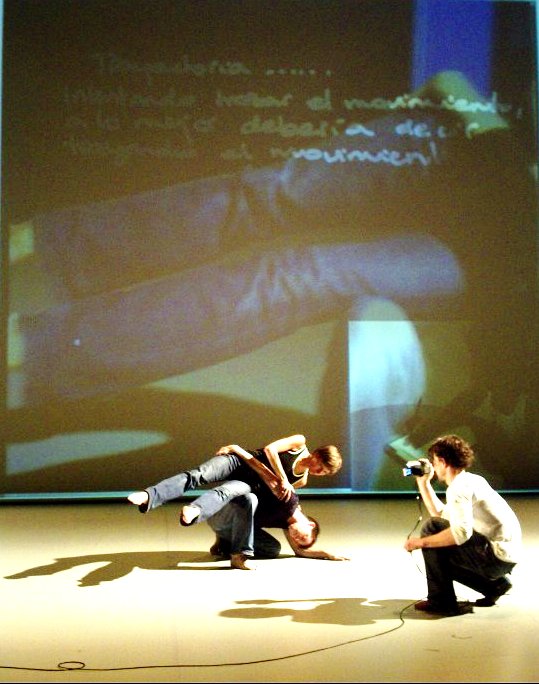

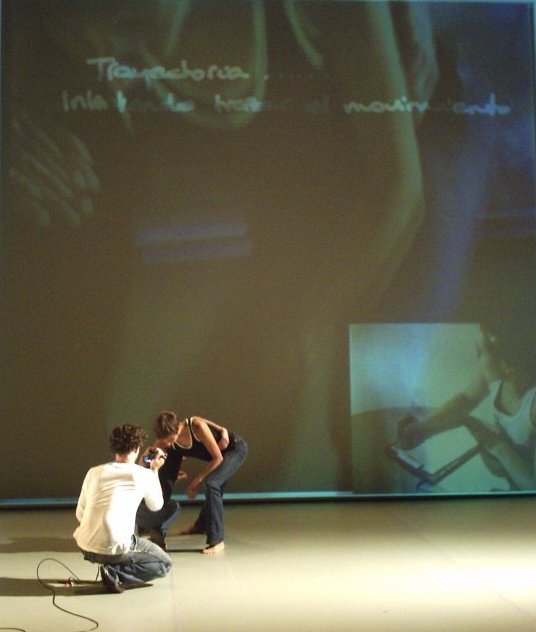

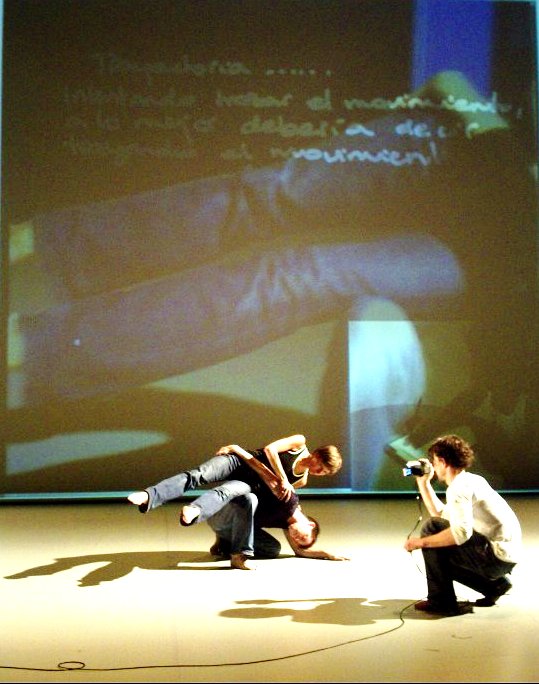

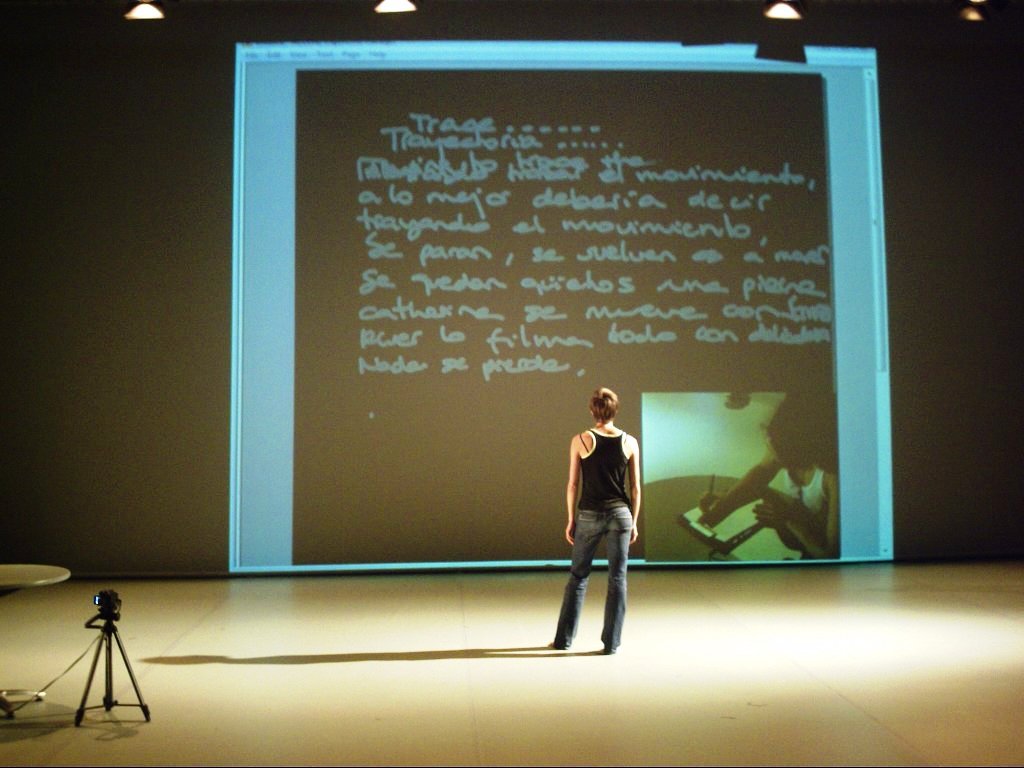

Trace Duet (Created in collaboration with and performed by Catherine Bennett and James Hewison)

In this duet we focused on bringing together the new software capability to produce layered transparency of windows and the use of the Acecad Digi Memo Pad as a graphics tablet. We also worked with a combination of handheld and fixed camera positions.

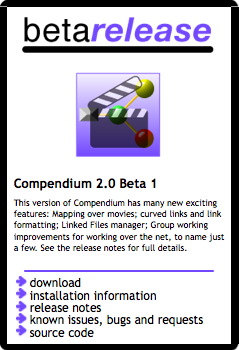

Hurrah! All of the extensions that we added to Compendium during the e-Dance project have now been folded into the new
