22/02/2008

[Simon / Open U writes…] — We’ve just completed a 2-day practicum in Manchester, continuing our experiments to understand the potential of Memetic’s replayable Access Grid functionality for choreographic rehearsal and performance.

Two AG Nodes (i.e. rooms wired for sound and large format video) were rearranged for dance, clearing the usual tables and chairs. With the Nodes connected, a dancer in each Node, and Helen as choreographic researcher co-located with each in turn, we could then explore the impact of different window configurations on the dancers’ self image, as well as their projected images to the other Node, and mixing recorded and live performance.

Helen will add more on the choreographic research dimensions she was exploring. My interest was in moving towards articulating the “design space” we are constructing, so that we have a clearer idea of how to position our work along its different dimensions. (Reflecting on how our roles are playing out as we figure out how to work with each other is, of course, a central part of the project…)

To start with, we can identify a number of design dimensions:

- synchronous — asynchronous

- recorded — live (noting that ‘live’ is now a problematic term; see Philip Auslander, Liveness: Performance in a Mediatized Culture)

- virtual — physical

- modality of annotation: spoken dialogue/written/mapped

- AG as performance environment vs. as rehearsal documentation context

- AG as performance environment enabling traditional co-present choreographic practices — or as a means of generating/enabling new choreographic practices

- documenting process in the AG ‘vs’ the non-AG communication ecology that emerges around the e-Dance tools (what would a template for an e-Dance-based project website look like, in order to support this more ‘invisible’ work?)

- deictic annotation: gesture, sketching, highlighting windows

- in-between-ness: emergent structures/patterns are what make a moment potentially interesting and worth annotating, e.g. the relationships between specific video windows

- continuous — discontinuous space: moving beyond geometrical/Euclidean space

- continuous — discontinuous time: moving beyond a single, linear time

- framing: aesthetic decisions and the generation of meaning around the revealing of process; framing in the ‘window’ sense versus the visual arts sense

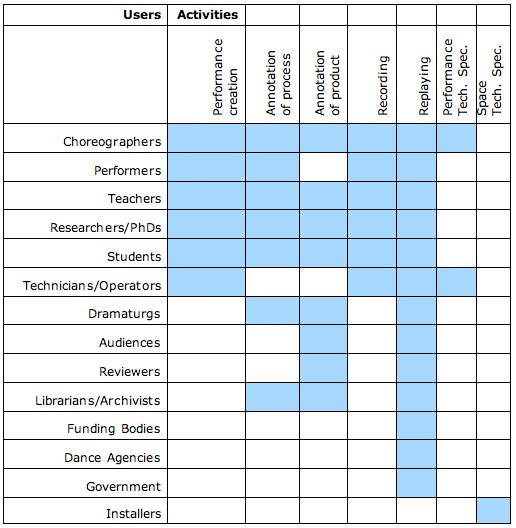

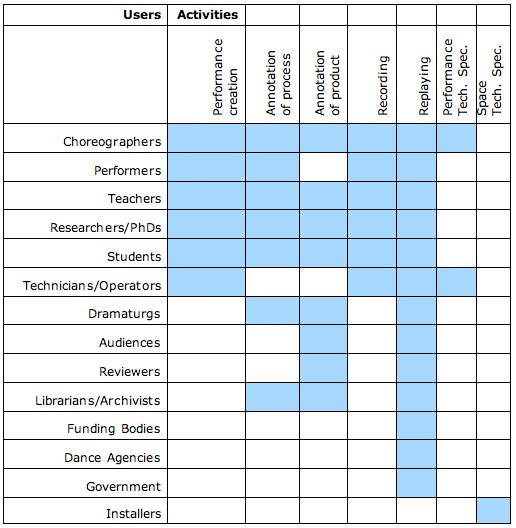

Bringing to bear an HCI orientation, an initial analysis of the user groups who could potentially use the e-Dance tools, and the activities they might perform with them, yielded the following matrix:

We will not be working with all of these user communities of course, but the in-depth work we do can now be positioned in relation to the use cases we do not cover.


Categories : AG, Event

1/02/2008

Today and yesterday Anja, Helen, Sita and I have been getting into the nitty-gritty of the e-Dance project's software requirements in Manchester. Helen and Sita arrived (after what sounded like a monumental train journey for Helen!) and we got straight into discussing their experience of using the mish-mash of software we have given them so far. Of course, this software hadn't been working 100% smoothly, as it was being used in a context it had not been conceived for: everything running on one machine without a network. However, they had managed to make some useful recordings, which they had sent to us and which we had already imported onto our local recording server before they arrived.

We started by discussing what Helen and Sita felt was missing from the existing recordings. One issue was that the windows all looked like windows (i.e. had hard frames), which made it hard to forget that there was a computer doing the work. This led to further windowing requirements, such as window transparency and non-rectangular windows, which would allow freer layouts of the videos. We quickly realised that this could also help in a meeting context, as it would help AG users forget that they are using computers and just get on with the communication.

We also discussed having a single window capable of displaying different videos. In a performance context this would look better, since you would not want the audience to see windows being moved around, but you would want to switch between videos. It was also desirable to split the recorded video into separate streams that could be controlled independently, so that different parts of different recordings could be combined. This would also require the ability to jump between recordings, something the current software does not allow.

We moved on to talk about drawing on videos. This would allow a level of visual communication between the dancers and choreographers, which can be essential to the process; as mentioned earlier, much of the communication is visual (e.g. “do this” rather than “move from position A to position B”). Drawing on the videos would enable this type of communication, although to be effective the lines would need to be reproduced as they were drawn rather than appearing only as finished lines (i.e. the movement of the drawing, not just the result). We realised there was a need for tools to manage the lines, as you may want some lines that stay for the duration of the video and others that disappear after a predetermined interval (and, of course, a clear function to remove lines).
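To make the drawing requirement a little more concrete, here is a minimal sketch of one way the annotation data might be modelled. It is an illustration only, not the project's design: `Stroke`, `AnnotationLayer`, `visible_points` and `frame` are hypothetical names, and it assumes each stroke is stored as points timestamped in video time, so that replay can redraw the line as it was drawn. A stroke with no `lifetime` persists for the whole video; otherwise it disappears that many seconds after it was finished, and `clear` removes everything.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class Stroke:
    """A hypothetical drawn line: (video_time, (x, y)) samples in drawing order."""
    samples: List[Tuple[float, Point]]
    # None means the line stays for the whole video; otherwise the number of
    # seconds it remains visible after the last point was drawn.
    lifetime: Optional[float] = None

    def start(self) -> float:
        return self.samples[0][0]

    def end(self) -> float:
        return self.samples[-1][0]

    def visible_points(self, t: float) -> List[Point]:
        """Points to render at video time t: the line grows as it was drawn,
        then vanishes once its lifetime (if any) has expired."""
        if t < self.start():
            return []
        if self.lifetime is not None and t > self.end() + self.lifetime:
            return []
        return [p for (ts, p) in self.samples if ts <= t]


@dataclass
class AnnotationLayer:
    """A hypothetical overlay holding all strokes drawn on one video."""
    strokes: List[Stroke] = field(default_factory=list)

    def add(self, stroke: Stroke) -> None:
        self.strokes.append(stroke)

    def clear(self) -> None:
        """The 'clear function' for removing all lines."""
        self.strokes.clear()

    def frame(self, t: float) -> List[List[Point]]:
        """Every (possibly partial) stroke visible at time t; empty ones omitted."""
        visible = (s.visible_points(t) for s in self.strokes)
        return [pts for pts in visible if pts]
```

For example, a stroke drawn between 1.0 s and 2.0 s with `lifetime=3.0` is partly visible at 1.6 s, fully visible at 4.5 s and gone by 5.5 s, while a stroke with no lifetime stays until `clear()` is called. Storing timestamps rather than finished shapes is what lets a replay show the movement of the drawing.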

Finally, we discussed how all of this would be recorded, including the movement of windows and the drawings on the screen, so that it could be replayed either during a live event or during another recording. We realised that this would need a user interface, and this is where we hit problems: it would be complicated to represent the flow through the videos. We realised that this may be related to the work on Compendium, and that is where we left this part, as Simon was not present to help out!


Categories : Event, Manchester Jan/Feb 2008, Software, Team

1/02/2008

Sita and I have spent the last two days with Andrew and Anja in Manchester. The aim of the workshop was to revisit the video stream material from the first workshop in January and begin to look at the possibilities that this material might provide compositionally.

In the first workshop, we were testing the system and generating material for the windows. We were exploring what the system offered creatively that standard video cameras don’t.

Yesterday we looked at Memetic as a playback system. We asked ourselves what Memetic and AG offer as a performance environment. How do we play with recorded material in this environment? How do we navigate and compose video stream material? What does the system permit or enable in terms of the relationship between live and streamed material?

Most of the first day was spent in discussion and playing with the video streams. Initially we watched the videos, but gradually they became background information from which we extrapolated the kinds of developments we need in order to move forward. We started to construct a ‘to do’ list for Anja and Andrew.

We began with the question: what can we do with this environment now? Picking up on the idea of the window/frame from the first workshop, we translated it into some practical development needs with Anja and Andrew. For instance, we were particularly concerned with the properties of the windows, their animation, and their presentation in a performative context.

On the second day, we experimented with layering material via multiple recordings. We took some of the video streams recorded at the first choreographic workshop and played them back through Memetic, while simultaneously recording new live material that Helen improvised in response. This gave us two layers of material. It also raised some interesting ideas about the mediatisation of space, not only between the windows but also in the experience of the live performer, who is improvising in response to her own body presented in a fragmentary manner through the live windows. We then went through the cycle a third time, using the first two sets of recorded material and improvising a third gestural response in a new set of windows. Operator control of the streamed videos allowed a playful approach, highlighting the differences between the pre-recorded and the live by jumping around through the video streams during the performance.


Categories : Event, Manchester Jan/Feb 2008

1/02/2008

The great goal of the last two days was to finally find out what we are supposed to develop over the next year. Well, we did it. There is a list, even a prioritised one, so we can say it was a successful time; and besides that, it was a lot of fun.

Helen and Sita came up yesterday and we reviewed some of the recordings from the last workshop in Bedford. There is lots of material. As someone who was not in Bedford at the time of the recording, I felt I was not getting the full picture, but of course I was not supposed to: that is the artistic side of it.

Interestingly, we took the recorded material and Helen improvised to it, and of course we recorded both the original material and the new video streams. To complicate things even more, the next stage was replaying the newly recorded material and adding more video feeds to it, so that Helen moves through the different times.

Placing video windows on the screen is an artwork in its own right (something we will support during the project). Sita took up this important task and moved and resized them very professionally. At this stage of the project we just have some ‘old-fashioned’ photographs to show for it. I wonder how somebody else, taking our video streams, would reshape the space. The impression would be completely different.


Categories : Event, Manchester Jan/Feb 2008